TL;DR

AI is moving from cloud-only compute into physical machines and edge hardware. The core shift is from smart software to autonomous devices that operate in the real world. Wave 2 drives a large hardware refresh across laptops, smartphones, and enterprise edge systems, and by 2026 AI-capable hardware is expected to become the default standard rather than a niche upgrade.

Wave 3 goes further and demands heavy physical CapEx: AI scales into robots, autonomous vehicles, and drone fleets, changing where value is created. This piece explains why hyperscalers face margin pressure, how edge inference weakens cloud pricing power, and why product-focused companies and industrial suppliers matter more. It also shows how this transition could push manufacturing into a PMI > 50 cycle and how to separate real demand from hype.

Key points

Fact: Gartner expects AI-integrated hardware to become the industry standard by 2026.

Mistake: Assuming hyperscalers automatically win the AI era.

Action: Track orders, backlogs, and delivery times, not AI narratives.

Critical insight

Real AI adoption shows up first in factories and shipments, not model announcements.


For the past decade, AI may have felt abstract because it lived in the cloud and showed up as APIs, dashboards, and demos. You clicked buttons, sent prompts, and waited for a response. That was AI version one.

Now the fundamentals are changing.

🚀 The Major Transformation of AI

AI infrastructure is no longer just a cloud story. It is becoming a real-economy buildout, and AI is starting to show up in physical workflows, not just SaaS products.

It is no longer just "a feature"; it is being embedded directly into devices, machines, vehicles, and industrial operations.

It’s driven by a wave of technical breakthroughs in 2024-25 that sharply upgraded AI model quality while slashing latency, cutting cost per task, and making real-world deployment practical.

AI is now good enough, fast enough, and cheap enough to leave the lab and scale into reality.

Let me break those four points down:

Model quality crossed a threshold:

New AI models can do things earlier models couldn’t. They don’t just produce text; they can reason. The latest reasoning models work through problems step by step instead of guessing from patterns.

One way to measure this progress is the GPQA benchmark, a set of extremely difficult, graduate-level questions in biology, physics, and chemistry. GPQA is designed to test whether an AI can reason like an expert scientist.

OpenAI’s GPT-5.2 shows strong results on this benchmark, which signals real gains in reasoning ability.

Beyond reasoning, models have also gained vision. Modern AI can understand images and video, not just written text, which allows them to read diagrams, analyze photos, and interpret live camera input.

AI can now even help control physical systems such as robot arms, drones, and smart home devices. It is moving beyond the screen and into the physical world.

Latency collapsed:

A major barrier to deploying AI at scale has been slow and unpredictable response times. This problem is especially serious when everything depends on cloud servers. For many real-world applications, this delay makes AI unreliable.

However, recent performance data shows that OpenAI’s GPT-5.2 responds much faster than GPT-5 and GPT-5.1 across different tasks.

This jump in speed did not come from software alone; better hardware and model optimization worked together to reduce latency.

2025 was a breakout year for AI hardware: Nvidia ramped its new Blackwell datacenter GPUs, while competitors such as AMD (the Instinct MI300 line and its successors) and Google (TPU v5 and its successors) pushed performance forward.

The performance gap between Nvidia and its competitors narrowed quickly.

Another major shift was the rise of edge AI chips. These chips are designed to run AI inference locally with much lower power draw, and for latency-sensitive tasks they can respond faster than a round trip to a cloud datacenter.

As a result, many tasks that once took seconds now run in milliseconds. Some no longer need to send data to a distant datacenter at all; the work happens on or near the device.

Cost per task imploded:

The cost of AI inference has dropped fast. Getting an answer from an AI model is now far cheaper than it was two years ago.

Stanford’s AI Index shows that for a model with GPT-3.5-level performance, inference cost fell sharply: from about $20 per million tokens in late 2022 to around $0.07 by late 2024.

That’s more than a 280× reduction in just two years.
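To see how steep that curve is, it helps to turn the total into a yearly rate. Here is a minimal Python sketch that uses only the two price points quoted above; treating the window as exactly two years is a simplifying assumption, and the helper names and the one-billion-unit monthly workload are hypothetical.

```python
# Worked arithmetic for the inference-cost decline described above.
# Prices are the two quoted figures (USD per million units of text);
# the two-year window is a simplifying assumption.


def reduction_factor(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is than the old one."""
    return old_price / new_price


def annualized_factor(total_factor: float, years: float) -> float:
    """Constant yearly factor that compounds to `total_factor` over `years`."""
    return total_factor ** (1.0 / years)


old_price, new_price = 20.00, 0.07
total = reduction_factor(old_price, new_price)
per_year = annualized_factor(total, years=2.0)

# Hypothetical workload: one billion units of text per month (1,000 million).
workload_millions = 1_000

print(f"total reduction: {total:.1f}x")
print(f"annualized: {per_year:.1f}x per year")
print(f"monthly bill: ${old_price * workload_millions:,.0f} -> ${new_price * workload_millions:,.0f}")
```

Roughly a 286× total drop works out to about 17× cheaper every year, which is why a workload that cost thousands of dollars a month at the start of the window costs tens of dollars at the end.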

Three forces drove this: better chips improved price-performance, algorithms became more efficient, and smaller, specialized models replaced one giant model for every task.

For businesses, this changes everything. Lower inference costs make AI practical in many more products and workflows.

Deployability changed:

Deployability comes from the combination of these three changes:

Higher model quality makes AI reliable enough to trust in real workflows, not just experiments or demos.

Low latency changes how AI feels in use.

Instead of behaving like a slow cloud service, AI now feels native and responsive, as if it were built directly into the product.

This is critical for user-facing and real-time systems.

Lower inference costs make AI economically viable at scale.

Together, these shifts mark a turning point.


This series will be updated more frequently in the PRO edition moving forward.


🧭 The Physical AI Buildout Timeline

AI’s expansion into the physical world is not happening all at once. It is unfolding in three distinct waves. Understanding this timeline matters if you care about capital flows, supply chains, and investment positioning.

Wave 1: Cloud Commissioning Catch-Up

Hyperscalers are finishing what they ordered years ago: data centers are being built, GPUs installed, and power and networking capacity brought online.

This wave matters, but it is not where the long-term structural shift happens.

Wave 2: Edge Device Upgrades

After cloud infrastructure is established, the next major shift involves moving AI processing directly onto user devices. This "edge upgrade" phase, Wave 2, transitions AI from remote cloud servers to local execution, resulting in superior speed, reduced costs, enhanced privacy, and easier scalability.

This wave is defined by a massive hardware refresh across three main categories:

Consumer Tech: Laptops and PCs equipped with dedicated neural processing units (NPUs).

Mobile: Smartphones purpose-built for Generative AI.

Enterprise Edge: Localized servers and sensors for industries like healthcare and manufacturing that require data to stay on-site.

Gartner expects AI-integrated hardware to move from a specialized market to the industry standard by 2026, which would signal a rapid, global upgrade cycle.

Wave 3: Physical AI

In Wave 3, AI moves beyond pure compute to demand heavy physical CapEx. The focus shifts from "smart devices" to "smart machines" and eventually to entire autonomous fleets.

Wave 3 is defined by physical autonomy:

Robots (including humanoids): Machines that sense and act in the physical world.

Autonomous Vehicles: Starting in restricted environments (mines, ports, campuses) before scaling to broad robotaxi deployments.

Drone Fleets: Large-scale use for inspection, mapping, security, and delivery.

This phase shifts AI infrastructure from data centers into robots, vehicles, and machines operating in the real world.

⚠️ Hyperscalers at a Crossroads

Most narratives assume hyperscalers are the safest AI infrastructure bet. The reality is more nuanced.

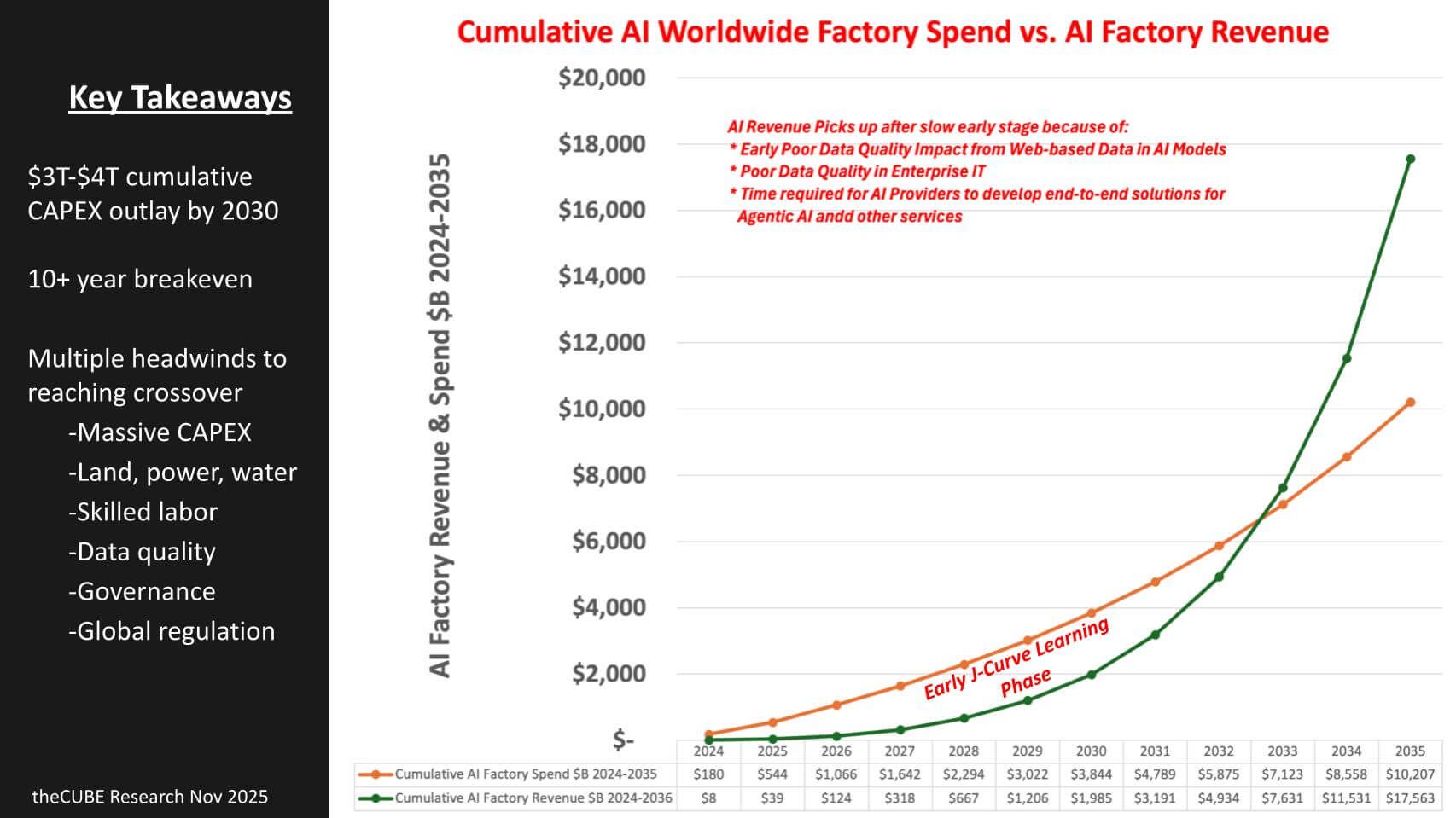

Timing gap: Hyperscalers spend capital upfront, but it often takes three to four quarters before capacity is fully monetized. Construction costs, depreciation, and operating expenses hit immediately. That creates margin pressure precisely when expectations are highest.
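The timing gap is easy to see in a toy model. The Python sketch below uses made-up numbers throughout (a $10B buildout, six-year straight-line depreciation, a four-quarter linear revenue ramp, flat operating costs); none of them come from any company's filings, and the `Buildout` class is purely illustrative. It only shows why profit dips before it recovers.

```python
from dataclasses import dataclass


@dataclass
class Buildout:
    """Toy data-center project; every number passed in is hypothetical."""
    capex: float           # upfront spend, $B
    useful_life_q: int     # straight-line depreciation period, in quarters
    full_revenue_q: float  # quarterly revenue once capacity is fully monetized, $B
    ramp_q: int            # quarters needed to reach full monetization
    opex_q: float          # quarterly operating cost (power, staff), $B

    def depreciation_q(self) -> float:
        # Straight-line: the same charge every quarter from day one.
        return self.capex / self.useful_life_q

    def revenue(self, q: int) -> float:
        # Linear ramp: 1/ramp_q of full revenue in quarter 1, full from quarter ramp_q on.
        return self.full_revenue_q * min(q, self.ramp_q) / self.ramp_q

    def operating_profit(self, q: int) -> float:
        return self.revenue(q) - self.depreciation_q() - self.opex_q


project = Buildout(capex=10.0, useful_life_q=24, full_revenue_q=1.2, ramp_q=4, opex_q=0.2)
for q in range(1, 7):
    print(f"Q{q}: revenue {project.revenue(q):.2f}, operating profit {project.operating_profit(q):+.2f}")
```

With these inputs the project loses money in its first two quarters and turns positive only in the third, even though the full spend was committed on day one. A longer ramp or a shorter depreciation schedule would stretch that loss window further.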

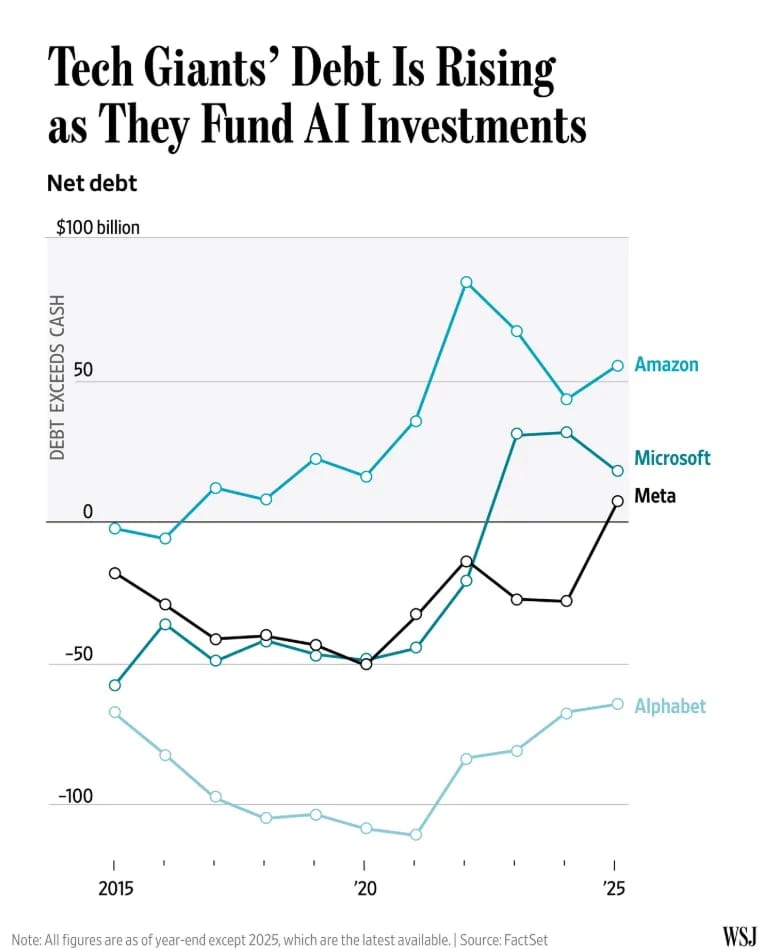

Debt risk: For the first time, hyperscalers are meaningfully financing data center expansion with debt. Higher interest expenses change the risk profile of businesses that were once viewed as capital-light.

Edge inference displacement: As AI becomes capable enough to run locally on phones, laptops, and enterprise hardware, demand for cloud-based inference may slow, weakening cloud providers’ pricing power. This decentralizes AI infrastructure, moving it from hyperscale clouds to edge devices.

Companies like Microsoft and Google remain central to AI infrastructure. But the AI era does not guarantee linear returns for cloud giants. Distribution matters as much as scale.

💡 The Investment Hypothesis

AI investment is evolving from digital infrastructure (software and data centers) into a tangible, "real-world" buildout as it integrates into physical hardware. In other words, AI infrastructure is becoming physical, distributed, and capital-intensive.

This shift creates two primary investment categories:

1. Product Winners (The Implementation Layer)

These are companies successfully embedding AI into consumer and industrial hardware, such as devices, vehicles, and robotics. Success is measured by physical shipments, user adoption, and recurring revenue, rather than just backend compute spending.

2. The Manufacturing Supercycle (PMI > 50)

The mass production of AI-enabled equipment triggers a broad industrial recovery. As factories ramp up production to meet demand, we see:

Increased New Orders: Expanding backlogs and longer delivery times.

Expansionary PMI: A reading above 50, signaling that manufacturing activity is expanding.

Earnings Leverage: Cyclical suppliers benefit as industries restart capital expenditure (CapEx) and restock inventory.
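For readers who want to know what "PMI > 50" actually measures: ISM's manufacturing PMI is an equally weighted average of five diffusion indexes (new orders, production, employment, supplier deliveries, and inventories). Each diffusion index is the percentage of purchasing managers reporting improvement plus half the percentage reporting no change, so 50 is the point where improvers and decliners balance. The Python sketch below reproduces that arithmetic on hypothetical survey shares and skips the seasonal adjustment ISM applies to the published series.

```python
from statistics import mean

# The five equally weighted components of ISM's manufacturing PMI.
COMPONENTS = ("new_orders", "production", "employment",
              "supplier_deliveries", "inventories")


def diffusion_index(pct_better: float, pct_same: float, pct_worse: float) -> float:
    """Percent reporting improvement plus half the percent reporting no change."""
    if abs(pct_better + pct_same + pct_worse - 100.0) > 1e-6:
        raise ValueError("survey shares must sum to 100")
    return pct_better + 0.5 * pct_same


def composite_pmi(survey: dict[str, tuple[float, float, float]]) -> float:
    """Equal-weighted average of the five component indexes (no seasonal adjustment)."""
    if set(survey) != set(COMPONENTS):
        raise ValueError(f"survey must cover exactly: {COMPONENTS}")
    return mean(diffusion_index(*survey[name]) for name in COMPONENTS)


# Hypothetical survey shares: (% better, % same, % worse).
# For supplier deliveries, "better" is counted as *slower* deliveries,
# because stretched lead times usually signal strong demand.
survey = {
    "new_orders": (40, 45, 15),
    "production": (35, 50, 15),
    "employment": (25, 55, 20),
    "supplier_deliveries": (20, 70, 10),
    "inventories": (20, 50, 30),
}
pmi = composite_pmi(survey)
print(f"PMI = {pmi:.1f} ({'expansion' if pmi > 50 else 'contraction or flat'})")
```

Note that new orders and slower supplier deliveries both feed the index directly, which is why the order backlogs and longer delivery times listed above show up in the PMI itself.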

Product Winners

Apple $AAPL:

While critics claim Apple has missed the AI race, its strategy focuses on driving hardware upgrades and ecosystem spending rather than winning the cloud war.

Apple’s strategy relies on three core levers:

Siri as an Autonomous Agent: Apple aims to turn Siri, by 2026, into a tool that understands personal context and performs actions across apps. Building on Siri’s existing 1.5 billion daily requests, this transformation could drive a sharp increase in usage.

On-Device AI Ecosystem: By opening its on-device foundation models to third-party developers, Apple provides an "on-device brain" that offers faster responses, offline capability, and stronger privacy than cloud-based solutions.

Low-CapEx Monetization: Unlike "hyperscalers" spending billions on GPU fleets, Apple is adopting a capital-light model. By partnering with providers like Google (Gemini), it converts massive infrastructure risk into manageable operating costs.

Apple is now positioned to monetize AI through services and device sales without the volatile depreciation risks of building massive data centers. This approach fits the AI era, where efficiency and ecosystem control outperform brute-force compute.

Tesla $TSLA:

In contrast to Apple, Tesla’s path to victory lies in becoming the mass-manufacturer of real-world robots that automate both mobility and labor.

Tesla is executing this through three core pillars:

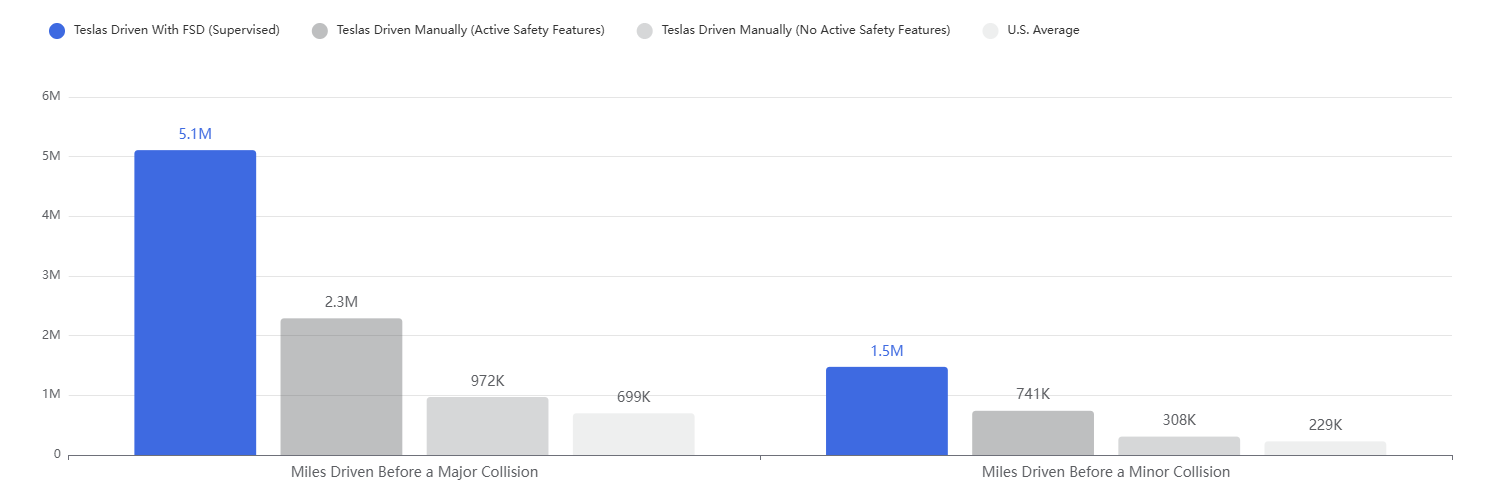

Full Self-Driving (FSD) as a "Robot Brain": Tesla treats FSD as a scalable software stack that improves via continuous updates. By shifting from one-time car sales to high-margin, recurring software revenue, every Tesla effectively becomes a software-upgradable robot.

Robotaxi Service Fleet: This shifts the business model from selling cars to selling rides. By removing the human driver, Tesla can drastically lower costs per mile and increase margins. After successful driverless pilots in 2025, Tesla aims to scale purpose-built "Cybercabs" starting in late 2026, with forecasts suggesting a fleet of 1 million vehicles by 2035.

Optimus Humanoid Robot: Tesla is repurposing its FSD computer vision and AI for Optimus to target the global labor market. Tesla’s unique advantage is the ability to test these robots within its own "Gigafactories." Scaled production is expected by 2027, with analysts projecting billions in revenue from robot sales alone by 2035.

While Apple wins through a "Light CapEx" digital ecosystem, Tesla wins through "Heavy CapEx" physical automation, turning AI into a fleet of autonomous machines.

PMI > 50 Cycles

When AI integration in devices (Apple) and machines (Tesla) triggers mass adoption, demand shifts into the real economy, manifesting as factory orders and expanding backlogs.

This creates a classic PMI > 50 environment, signaling a broad manufacturing expansion. That expansion is a defining economic signal of the AI era moving into the physical world.

The Real-Economy Beneficiaries:

In this cycle, the market rewards suppliers of essential physical components, including:

Connectivity & Networking: Fiber, optical interconnects, and high-speed edge infrastructure.

Storage & Memory: The capacity layer required for local AI inference.

Power Solutions: Distributed and on-site power for industrial uptime.

Industrial Automation: Sensors, actuators, and rugged edge computers that run "smart machines."

Historical Precedents:

History suggests that large physical infrastructure buildouts tend to keep the PMI above 50 for long stretches:

The 1960s Auto Era: It sparked a massive expansion beyond just cars, building out everything from road networks and fuel stations to repair ecosystems and high-volume manufacturing.

The 2012-2019 Smartphone/4G Cycle: The shift to smartphones drove massive capital flows into new handsets, chips, and cell towers, creating a continuous cycle of upgrades as network standards evolved.

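Both buildouts coincided with long stretches of PMI readings above 50, though neither kept the index there continuously; the ISM PMI dipped below 50 in late 2015 and again in late 2019, for example.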

Identifying winners in an expanding manufacturing cycle (PMI > 50) is challenging because many prominent "Wave 1" stocks already trade at elevated valuations, with much of the upside priced in.

To find untapped potential, investors should look deeper into the supply chain at the "boring-yet-essential" enablers that facilitate the physical rollout of AI.

The Filter for Success:

From Narrative to Numbers: Watch for management to shift from describing AI as a general "trend" to citing specific, growing order volumes.

The "Classic Tells": Verify this transition by looking for rising order intake, expanding backlogs, longer delivery lead times, and upward revisions in financial guidance.

Together, these indicators suggest that the PMI cycle is turning and that a company is seeing real-world demand rather than market hype.
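The classic tells can be turned into a simple screen. The Python sketch below is a hypothetical example, not a standard model: the `Quarter` fields, the two-quarter lookback, and the book-to-bill threshold of 1.0 are assumptions chosen for illustration, and the figures are made up. Book-to-bill (new orders divided by revenue) above 1 means the backlog is growing, since backlog rises by orders minus shipments, ignoring cancellations.

```python
from dataclasses import dataclass


@dataclass
class Quarter:
    """One quarter of hypothetical supplier data ($M unless noted)."""
    orders: float            # new order intake
    revenue: float           # shipments recognized as revenue
    backlog: float           # unfilled orders at quarter end
    lead_time_weeks: float   # quoted delivery lead time
    guidance_change: float   # change to full-year guidance, % (0 = unchanged)


def book_to_bill(q: Quarter) -> float:
    """New orders divided by revenue; above 1.0 means the order book is growing."""
    return q.orders / q.revenue


def classic_tells(history: list[Quarter], lookback: int = 2) -> dict[str, bool]:
    """Check each tell over the last `lookback` quarter-to-quarter moves."""
    if len(history) < lookback + 1:
        raise ValueError("need at least lookback + 1 quarters of data")
    recent = history[-(lookback + 1):]
    moves = list(zip(recent, recent[1:]))
    return {
        "rising_orders": all(b.orders > a.orders for a, b in moves),
        "book_to_bill_above_1": all(book_to_bill(q) > 1.0 for q in recent[1:]),
        "backlog_growing": all(b.backlog > a.backlog for a, b in moves),
        "lead_times_stretching": all(b.lead_time_weeks > a.lead_time_weeks for a, b in moves),
        "guidance_raised": any(q.guidance_change > 0 for q in recent[1:]),
    }


# Made-up supplier history; backlog rolls forward as backlog + orders - revenue.
history = [
    Quarter(orders=100, revenue=105, backlog=300, lead_time_weeks=10, guidance_change=0.0),
    Quarter(orders=115, revenue=108, backlog=307, lead_time_weeks=11, guidance_change=0.0),
    Quarter(orders=130, revenue=112, backlog=325, lead_time_weeks=13, guidance_change=2.5),
]
tells = classic_tells(history)
print(tells)
print("real demand signal" if all(tells.values()) else "narrative only, keep watching")
```

In practice you would feed this from reported order, backlog, lead-time, and guidance figures. A single good quarter proves little, which is why the sketch asks for consecutive improvements rather than one data point.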


⚠ This newsletter is for informational purposes only and should not be considered investment advice. Traders should conduct thorough research, understand the risks, and carefully evaluate their decisions before investing in cryptocurrency.
